The making of conversational UI: prompting, clicking, or both?

One of the simplest questions about the future of software is also the most revealing:

What’s faster: clicking around in an interface, or prompting an AI to act on your behalf?

At first glance, it feels obvious: AI should win. Why waste time dragging blocks or formatting text when you could just ask for it?

But when you zoom out, speed isn’t just about how fast the software responds. It’s about how quickly you can iterate toward the result you actually want.

And in that sense, the future isn’t a battle between prompting and UI - it’s a partnership.

Two Kinds of Thinking

Most of what we do inside software falls into one of two modes.

Type 1: Execution.

These are mechanical, operational tasks: formatting a slide, adding rows to a spreadsheet, moving blocks in Notion. They’re structured, predictable, and visual. You don’t need creativity; you need control. And here, the mouse wins. When you already know the exact change you want, clicking through a UI is faster and takes less mental effort than describing it in words.

Type 2: Organization and Strategy.

This is the messy stuff: turning a brain-dump into a meeting summary, clustering ideas after a brainstorming session, or shaping raw notes into an outline. It’s abstract and unstructured. Here, prompting shines. You can ask an AI to “make sense of this chaos,” and it will hand you a first pass faster than you could produce one manually.

This idea isn’t new. It echoes psychology’s classic distinction between System 1 and System 2 thinking, popularized by Daniel Kahneman. System 1 is fast, intuitive, and automatic, like clicking through familiar actions without much thought. System 2 is slow, deliberate, and analytical: the mode we enter when we need to organize, plan, or reason through messy problems.

Software today forces you to toggle manually between these modes. The next generation will adapt in real time to whichever kind of thinking you’re doing.

Clicking is best for precision; prompting is best for sense-making.

The Hybrid Workflow

The real magic happens when you stop thinking of prompting versus UI, and start moving fluidly between them.

You might ask AI to clean up your notes (a Type 2 task), then jump back into the interface to reorder or refine details (Type 1).

Each mode plays to the other’s strengths.

This back-and-forth (prompt, click, prompt again) becomes the new loop of productivity.

When Prompts Become Buttons

Over time, patterns emerge. You might find yourself typing the same prompt every morning:

“Show me my calendar events today.”

At some point, the system should notice.

Typing the same thing every day is slower than clicking once. So the prompt evolves into a button - “Show Calendar” - waiting for you next time you open the app.

That’s the principle: repetition should generate UI.

Prompts are where discovery begins; buttons are where efficiency stays.
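To make "repetition should generate UI" concrete, here is a minimal sketch in Python. It is an illustration, not a description of any shipping product: the `ShortcutSuggester` class, the `normalize` helper, and the threshold of three repeats are all hypothetical choices.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


def normalize(prompt: str) -> str:
    """Lowercase, collapse whitespace, and drop trailing punctuation
    so near-identical prompts count as the same request."""
    return " ".join(prompt.lower().split()).rstrip(".!?")


@dataclass
class ShortcutSuggester:
    # Hypothetical threshold: how many repeats before a prompt earns a button.
    threshold: int = 3
    counts: Counter = field(default_factory=Counter)
    buttons: List[str] = field(default_factory=list)

    def record(self, prompt: str) -> bool:
        """Record one prompt; return True only when it is first promoted to a button."""
        key = normalize(prompt)
        self.counts[key] += 1
        if self.counts[key] >= self.threshold and key not in self.buttons:
            self.buttons.append(key)
            return True
        return False
```

Calling `record("Show me my calendar events today.")` on three mornings would promote the prompt on the third call. A real system would also need a way to label the button and to retire shortcuts that stop being used.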

When Conversation Becomes Interface

Strategic, novel tasks will always begin as conversation. Routine, predictable tasks will always crystallize into UI.

The future interface blends both: a living surface that learns from how you think.

Conversation spawns new UI elements.

UI, in turn, accelerates your next conversation.

It’s not about talking to software anymore; it’s about software that talks with you.

The Human–Computer Loop

What this hybrid model really optimizes is the mental loop itself:

Thought → Interaction → Thought.

You externalize your messy ideas through a prompt.

You refine them through the UI.

You see the outcome, learn from it, and your next thought gets sharper.

It’s a cycle of cognitive offloading: software adapting to human thought, not the other way around.

The Big Picture

We’re heading toward software that can think ahead of us.

Conversational AI will be embedded directly inside interfaces.

You’ll move between prompting and clicking depending on what’s faster in the moment.

Over time, your software will:

Learn which actions you repeat

Turn them into shortcuts

Let you spend prompts on the high-level, creative stuff

The interface will evolve with you: each repeated prompt giving birth to a new button, each button freeing up mental space for bigger ideas.

Where Autoplay Comes In

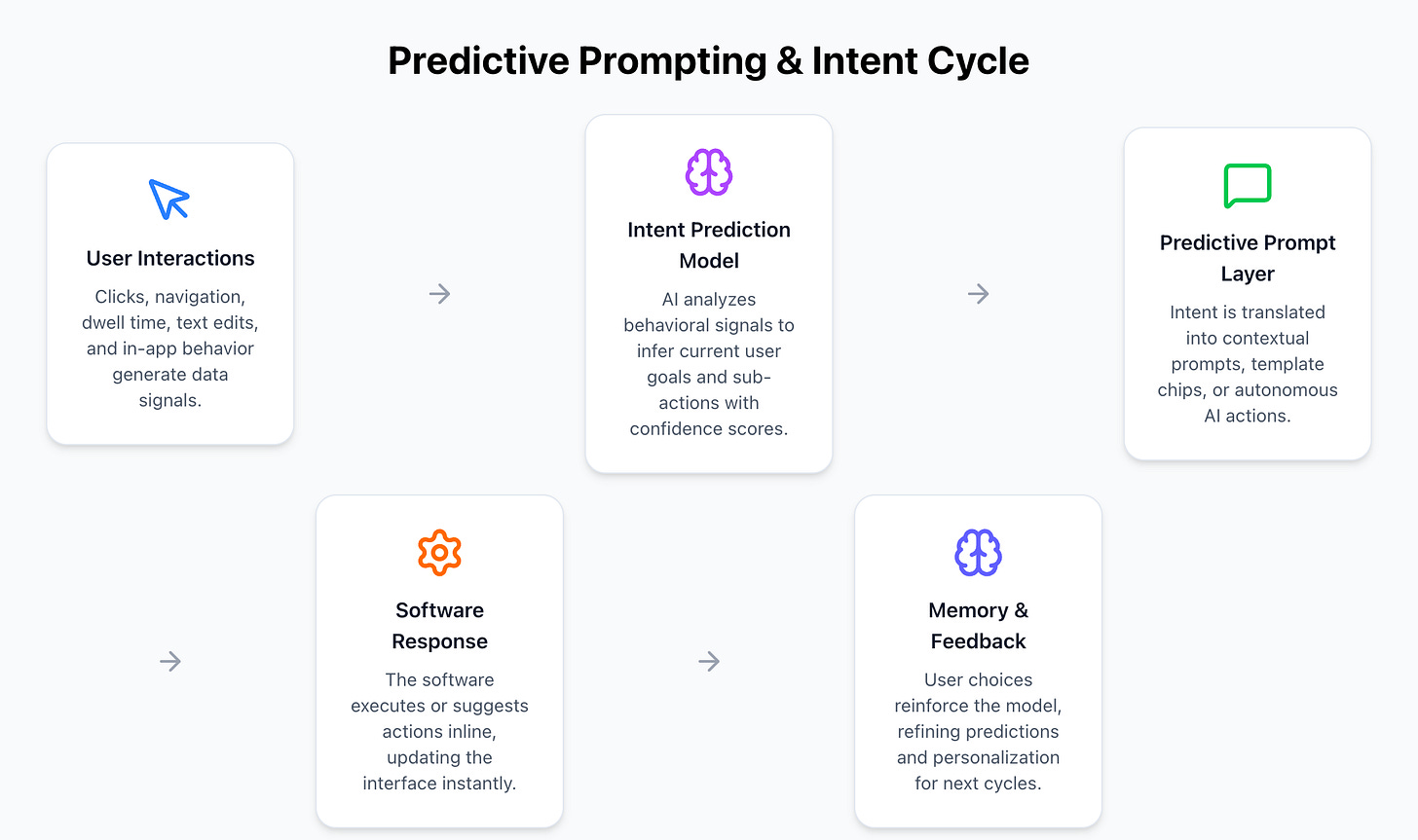

At Autoplay, we think the next leap is before the prompt.

We’re building a pre-intent layer - a system that predicts what you’re trying to do before you have to ask.

It reads behavioral context (clicks, hesitations, navigation paths) and infers intent in real time:

“Designing a presentation.” “Analyzing churn.” “Inviting a teammate.”

From there, it can surface contextual prompts, or take the action automatically.

If you hover over a chart, it might whisper: “Explain this?”

If you reformat slides for the third time, it might offer: “Want me to make them consistent?”

The assistant learns your rhythm, adapts its behavior, and eventually begins prompting the software on your behalf.
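As a rough illustration of how behavioral context could map to a contextual prompt, the sketch below encodes the two examples above as simple rules. It is not Autoplay’s implementation: the `Event` shape, the `suggest` function, the 1500 ms hover threshold, and the repeat limit of three are all assumptions made for the example, and a real pre-intent layer would presumably learn such signals rather than hard-code them.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Event:
    kind: str          # e.g. "hover", "click", "format" (hypothetical event kinds)
    target: str        # e.g. "chart", "slide"
    dwell_ms: int = 0  # how long the pointer lingered, a crude proxy for hesitation


def suggest(events: List[Event], hover_ms: int = 1500, repeat_limit: int = 3) -> Optional[str]:
    """Return a contextual prompt based on the most recent event, or None."""
    if not events:
        return None
    last = events[-1]
    # A long hover over a chart suggests the user wants it explained.
    if last.kind == "hover" and last.target == "chart" and last.dwell_ms >= hover_ms:
        return "Explain this?"
    # Reformatting slides repeatedly suggests an offer to standardize them.
    if last.kind == "format" and last.target == "slide":
        repeats = sum(1 for e in events if e.kind == "format" and e.target == "slide")
        if repeats >= repeat_limit:
            return "Want me to make them consistent?"
    return None
```

Here the third `Event("format", "slide")` in a session triggers the consistency offer, while a brief hover over a chart triggers nothing.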

Measuring the Shift

This isn’t just UX polish. It’s cognitive optimization.

By predicting intent, we remove friction at three levels:

Intent formulation: you don’t have to decide what to ask.

Prompt translation: you don’t have to phrase it perfectly.

Iteration: you don’t have to re-prompt endlessly - the system adjusts automatically.

The result: faster task completion, fewer prompts, and lower mental load.

The Endgame

The future of software isn’t about choosing between conversation and control.

It’s about merging them: turning clicks into language, and language into action.

When AI can infer what you mean before you say it, prompting becomes invisible.

Software finally starts to feel like thought.

That’s where we’re headed: