How to Hypothesis Test Your Way to Better Adoption

Product adoption doesn’t just “happen.” It’s the result of understanding what users are trying to achieve, spotting where they struggle, and systematically testing ways to help them succeed.

The challenge? You can’t improve adoption without knowing whether your ideas actually work. That’s where hypothesis testing comes in - not the vague “let’s try this” kind, but a structured, evidence-driven process that links user intent to measurable behaviour changes.

Below is a step-by-step framework to turn hunches into confident product decisions, using tools like Autoplay for session replays, natural-language search, and golden path tracking.

Step 1: Start with User Intent, Not Features

Before you test anything, clarify what your users are trying to do - not just what you want them to do.

Example:

A marketing automation platform notices that many new users visit the pricing page during onboarding but never start a trial.

We dig into intent signals:

Session replays in Autoplay show multiple users clicking between pricing tiers and the “Start Free Trial” button without committing.

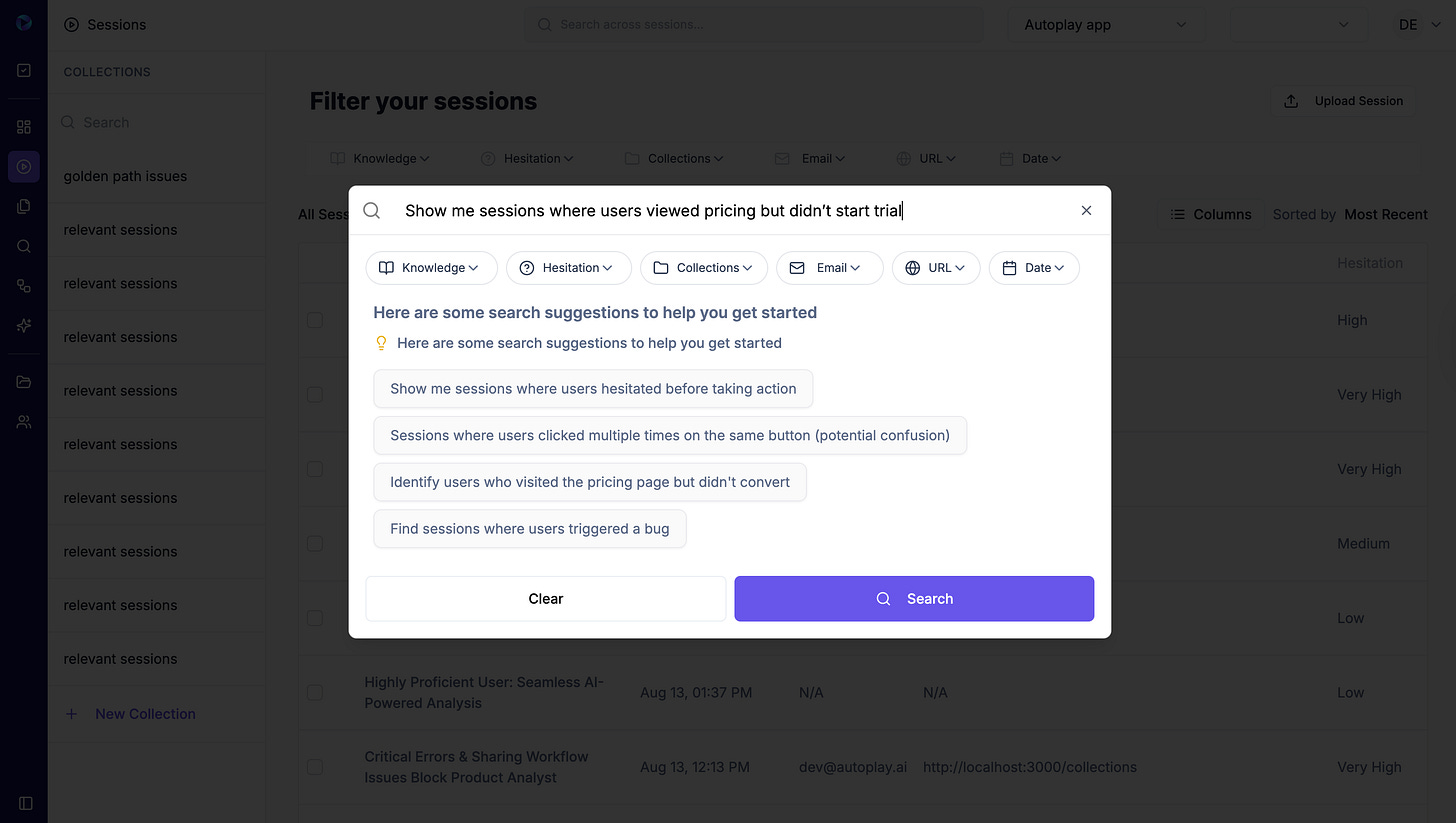

Natural-language search in Autoplay:

“Show me sessions where users viewed pricing but didn’t start trial”

Chatbot logs reveal repeated questions: “Which plan should I choose?” and “Do I have to pay now?”

Customer interviews confirm: users are unsure if they can try all features without paying upfront.

The output: Users’ intent is to explore the product risk-free, but they’re confused about which option gets them there.

Step 2: Map Your Golden Path

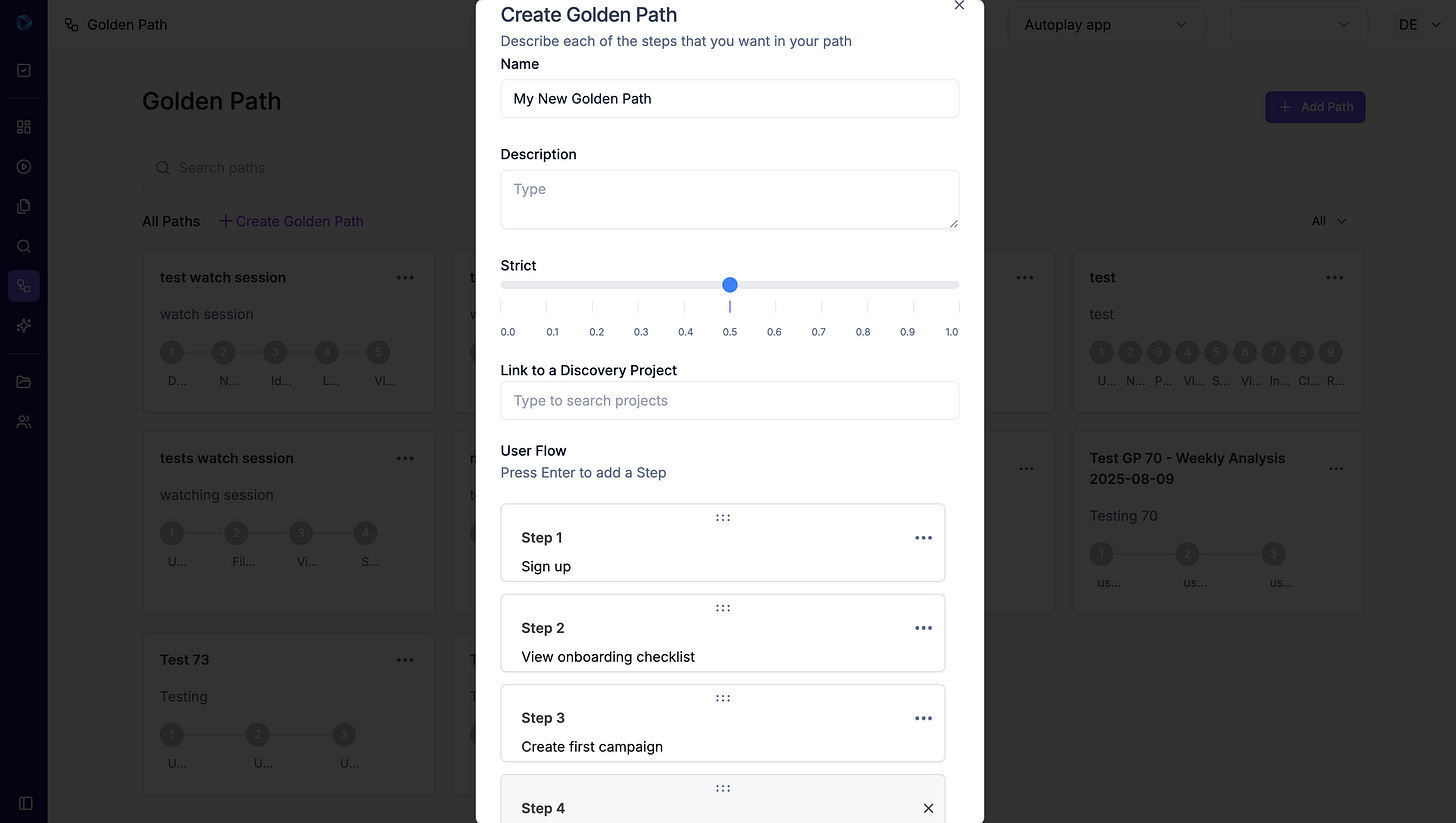

The Golden Path is your ideal journey from first touch to activation.

For this product:

Sign up

View onboarding checklist

Create first campaign

Schedule campaign

When we overlay replays on this path, we find a big drop-off after users view the pricing page, which is not a Golden Path step at all. Users are deviating from the Golden Path before they even get started.
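To put a number on that drop-off, the Golden Path can be treated as an ordered funnel. The snippet below is a minimal sketch, not Autoplay's API: it assumes you can export events as chronological `(user_id, event_name)` pairs, and the event names (`sign_up`, `view_pricing_page`, and so on) are hypothetical labels for the four steps above.

```python
from collections import defaultdict

# The four Golden Path steps from this article; the event names are hypothetical.
GOLDEN_PATH = [
    "sign_up",
    "view_onboarding_checklist",
    "create_first_campaign",
    "schedule_campaign",
]


def golden_path_funnel(events):
    """Count how many users reached each Golden Path step, in order.

    `events` is an iterable of (user_id, event_name) pairs in chronological
    order. A user counts for a step only after completing every earlier step.
    Off-path events (for example a pricing page view) are ignored.
    """
    next_step = defaultdict(int)  # user_id -> index of the next expected step
    for user_id, name in events:
        idx = next_step[user_id]
        if idx < len(GOLDEN_PATH) and name == GOLDEN_PATH[idx]:
            next_step[user_id] = idx + 1

    reached = [0] * len(GOLDEN_PATH)
    for completed in next_step.values():
        for i in range(completed):
            reached[i] += 1
    return list(zip(GOLDEN_PATH, reached))


def drop_off(funnel):
    """Return (from_step, to_step, fraction_lost) for consecutive steps."""
    result = []
    for (prev_step, prev_n), (step, n) in zip(funnel, funnel[1:]):
        lost = 1 - n / prev_n if prev_n else 0.0
        result.append((prev_step, step, lost))
    return result


# Hypothetical sample data: user "a" detours to pricing and stops.
sample_events = [
    ("a", "sign_up"),
    ("a", "view_pricing_page"),
    ("b", "sign_up"),
    ("b", "view_onboarding_checklist"),
    ("b", "create_first_campaign"),
    ("b", "schedule_campaign"),
]
for row in drop_off(golden_path_funnel(sample_events)):
    print(row)
```

The step with the largest fraction lost is where to focus replay review first.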

Step 3: Turn Friction Into a Testable Hypothesis

Looking at the evidence, we write a hypothesis:

For first-time self-serve users, replacing the pricing page during onboarding with a single “Start Free Trial” CTA that includes all features will increase the trial start rate by at least 10% within two weeks, because replays show confusion and backtracking between pricing tiers.
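A hypothesis like this has six parts: audience, change, metric, expected lift, time frame, and evidence. One way to keep every hypothesis in that shape is to record it as structured data. This is only an illustrative sketch; the `Hypothesis` class and its field names are our own invention, not part of any tool mentioned here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical template: one field per part of the hypothesis sentence."""

    audience: str
    change: str
    metric: str
    expected_lift_pct: float
    duration_days: int
    evidence: str

    def statement(self) -> str:
        # Render the fields back into a single testable sentence.
        return (
            f"For {self.audience}, {self.change} will increase {self.metric} "
            f"by {self.expected_lift_pct:g}% in {self.duration_days} days "
            f"because {self.evidence}."
        )


trial_cta = Hypothesis(
    audience="first-time self-serve users",
    change="replacing the onboarding pricing page with a single all-features trial CTA",
    metric="trial start rate",
    expected_lift_pct=10,
    duration_days=14,
    evidence="replays show confusion and backtracking between pricing tiers",
)
print(trial_cta.statement())
```

If a field is hard to fill in, the hypothesis is probably not ready to test yet.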

Step 4: Design the Experiment

Control group: Sees the current three-tier pricing page.

Variant group: Sees a single, clear CTA with “Start Free Trial – All Features Included.”

Primary metric: Trial start rate from onboarding flow.

Guardrail metrics: Support tickets, time to first campaign, Golden Path completion rate.

Replay plan: Create two Autoplay collections - “Control Trials” and “Variant Trials” - to review how each group behaves.
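Before launching, it is worth checking whether two weeks of traffic can detect a 10% lift at all. The sketch below uses the standard sample-size formula for comparing two proportions. Several inputs are assumptions rather than figures from this example: the 20% baseline trial start rate is hypothetical, the 10% target is read as a relative lift, and the 5% significance level and 80% power are conventional defaults.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH group for a two-sided two-proportion test.

    baseline_rate: control conversion rate, e.g. 0.20 (hypothetical here)
    relative_lift: smallest lift worth detecting, e.g. 0.10 for +10% relative
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be distinct and strictly between 0 and 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical baseline of 20%, looking for a 10% relative lift.
print(sample_size_per_group(baseline_rate=0.20, relative_lift=0.10))
```

If the onboarding flow will not see that many users per group in two weeks, either extend the test or target a larger effect.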

Step 5: Run and Monitor

During the two-week test:

Daily replay check using Autoplay’s natural-language search:

“Variant users who rage-clicked on trial CTA”

“Control users who returned to pricing page 2+ times”

Monitor funnel data for trial starts and guardrails.
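The guardrail part of that daily check can be as simple as comparing each metric between groups and flagging anything that moved the wrong way by more than an agreed tolerance. A minimal sketch follows; the metric keys, units, and tolerance values are all hypothetical, and the input dictionaries stand in for whatever your analytics export provides.

```python
# Guardrails from Step 4. Keys, directions, and tolerances are hypothetical:
# each entry is (which direction is good, allowed relative worsening).
GUARDRAILS = {
    "support_tickets_per_100_users": ("lower_is_better", 0.10),
    "median_hours_to_first_campaign": ("lower_is_better", 0.10),
    "golden_path_completion_rate": ("higher_is_better", 0.05),
}


def breached_guardrails(control, variant, guardrails=GUARDRAILS):
    """Return [(metric, relative_change)] for guardrails the variant worsened
    beyond tolerance. `control` and `variant` map metric name -> value."""
    breaches = []
    for metric, (direction, tolerance) in guardrails.items():
        base = control[metric]
        if base == 0:
            continue  # relative change is undefined; review this metric manually
        change = (variant[metric] - base) / base
        worsening = change if direction == "lower_is_better" else -change
        if worsening > tolerance:
            breaches.append((metric, change))
    return breaches


# Hypothetical daily snapshot: tickets are up 20% in the variant.
control_today = {
    "support_tickets_per_100_users": 10,
    "median_hours_to_first_campaign": 20,
    "golden_path_completion_rate": 0.40,
}
variant_today = {
    "support_tickets_per_100_users": 12,
    "median_hours_to_first_campaign": 20,
    "golden_path_completion_rate": 0.41,
}
print(breached_guardrails(control_today, variant_today))
```

A breach is a prompt to open the matching replay collection, not an automatic reason to stop the test.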

Step 6: Analyse the Results

Outcome:

Trial start rate was 12.4% higher in the variant than in the control.

Golden Path completion rate (sign up → campaign scheduled) improved by 8%.

Support tickets about “which plan to choose” dropped by 36%.

Replay review: Variant users clicked the CTA once and moved straight into onboarding; control users often bounced between tiers, read the fine print, and in some cases abandoned entirely.
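A lift in the primary metric is only convincing if it is unlikely to be noise. One common check is a two-proportion z-test on trial starts per group. The sketch below uses only the standard library. The counts in the usage example are made up purely to illustrate a 12.4% relative lift; they are not data from this experiment.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_control, n_control, conv_variant, n_variant):
    """Two-sided z-test for a difference in conversion rates.

    Returns (relative_lift, z, p_value), where relative_lift is the variant's
    rate change relative to the control rate.
    """
    p_c = conv_control / n_control
    p_v = conv_variant / n_variant

    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_control + conv_variant) / (n_control + n_variant)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_variant))

    z = (p_v - p_c) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    relative_lift = (p_v - p_c) / p_c
    return relative_lift, z, p_value


# Hypothetical counts: 1,000 of 5,000 control users and 1,124 of 5,000
# variant users started a trial.
lift, z, p = two_proportion_z_test(1000, 5000, 1124, 5000)
print(f"relative lift={lift:.1%}, z={z:.2f}, p={p:.4f}")
```

The same lift on much smaller groups could easily fail this test, which is why the numbers and the replay evidence are best read together.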

Step 7: Decide and Roll Out

The change meets all success criteria. The team ships the simplified trial CTA to all users and updates the Golden Path definition to reflect the new flow.

Why This Works

Hypothesis testing for adoption isn’t just about changing buttons or layouts. It’s about:

Starting with user intent - what they came to do.

Watching actual behaviour - how they try to do it.

Testing changes that reduce friction between the two.

Proving impact with both numbers and real-world evidence from replays.